Title of paper under discussion

Shared and distinct neural correlates of singing and speaking

Authors

Elif Özdemir, Andrea Norton and Gottfried Schlaug

Journal

NeuroImage, vol 33 (2006), pp 628–635

Link to paper (free access)

Overview

Scan the brain of someone tackling a musical task and then take a further scan as they tackle a language task. How do those two scans compare? Some areas of brain activity are associated with both tasks and some are specific to one task or the other. In other words, some brain areas process language AND music, while others process language OR music.

Elif Özdemir and her colleagues at Harvard Medical School were keen to investigate this further, specifically how patterns of brain activity differ when the same person sings and when they speak.

Participants were asked to perform in three different ways – sing, hum or speak – whilst in a brain scanner. Brain activity during each mode of performance was then compared.

When ‘humming’ brain activity was subtracted from ‘singing’ brain activity, the distribution of remaining brain activity was – unsurprisingly – very similar to the distribution of ‘speaking’ brain activity.

Furthermore, when ‘speaking’ brain activity was subtracted from ‘singing’ brain activity, the remaining activity was located primarily in the right hemisphere. This backs up previous research suggesting the left hemisphere is more specialised for language perception and production whilst music is more ‘bihemispherical’ – it’s processed in both hemispheres.

The authors suggest this “may be key to understanding why patients with left frontal lesions can sing the lyrics of a song but cannot speak the words”.

Method

Ten volunteers were asked, in turn, to lie in a ‘functional Magnetic Resonance Imaging’ (fMRI) brain scanner. Rigged up with earphones, they listened to a series of two-syllable words or phrases, each of which was either 1) spoken or 2) sung, with the two sung syllables a minor third apart. The participant was then asked to repeat the phrase they had just heard immediately and as exactly as they could.

Included amongst these phrases were some that were 3) hummed, a ‘control procedure’ in which the participants “heard the same two pitches used in the singing condition hummed in either an ascending or descending manner, and were asked to repeat what they had heard.”

[Two further control procedures – 4) listening to silence and 5) repeating meaningless pairs of vowels – were also included]

The MRI scanner provided ‘snapshots’ of brain activity. Two snapshots were taken during each trial, one just before the participant listened to the phrase and one just after they repeated it; the difference between these snapshots revealed the brain activity associated with the response. The timing of the listening and repeating was varied from trial to trial to ensure that peak brain activity was captured. And because the scanner is so noisy, the snapshots were kept as brief (short ‘acquisition time’) and as widely spaced (long ‘repetition time’) as possible, to minimise the scanner noise interfering with the results.

Results

Each result is presented as a ‘contrast’ in brain activity between two tasks: the brain activity evoked by one task minus that evoked by another, for example ‘singing vs silence’ or ‘singing vs speaking’.

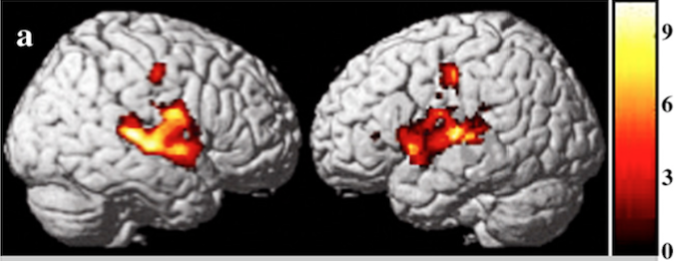

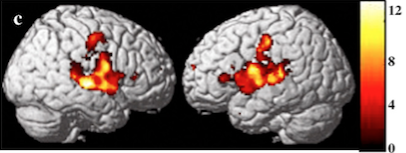

The contrasts below are of ‘speaking vs silence’, ‘singing vs silence’ and ‘humming vs silence’. Each picture depicts the difference in brain activity (on the right, then left, side of the brain).

All these contrasts showed the brain as busier – in both hemispheres – in three regions when singing, speaking or humming compared with being silent: 1) the inferior pre- and post-central gyri (regions involved in controlling the vocal apparatus and sensing its movements); 2) the inferior frontal gyrus (including ‘Broca’s area’, a region linked to speech production); and 3) the middle and posterior portions of the superior temporal gyrus and the superior temporal sulcus (regions of auditory processing, including ‘Wernicke’s area’, a region linked to speech comprehension).

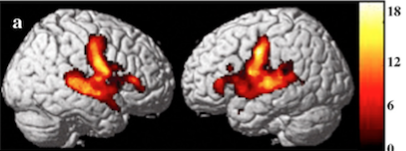

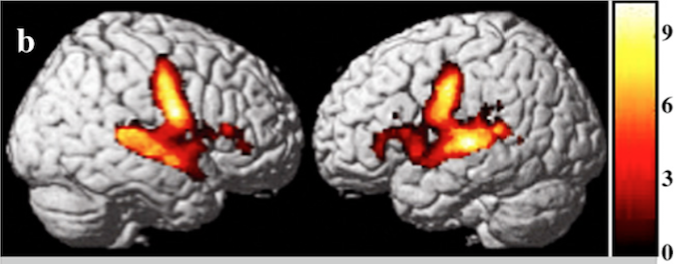

Özdemir and her colleagues then looked to compare ‘singing vs humming’:

and found it resembled ‘speech vs silence’. This, they reasoned, was unsurprising: “Since our singing condition equals speaking with intonation, subtracting the intonation should result in an activation pattern similar to that of the speaking vs silence contrast.”
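The authors’ reasoning rests on an additive assumption, known in the neuroimaging methods literature as ‘pure insertion’ (the paper is not being quoted here): singing is treated as speaking plus intonation, and humming as intonation alone. The minimal Python sketch below shows why, under that assumption, subtracting humming from singing should leave the same pattern as speaking minus silence. The region labels (IFG = inferior frontal gyrus, STG = superior temporal gyrus), the ‘articulation’ and ‘intonation’ components and every number are hypothetical, invented for illustration rather than taken from the study.

```python
import math

# Toy model of cognitive subtraction. ASSUMPTION: each condition's activity is
# the plain sum of hypothetical components ('pure insertion').
# Region names and values are invented for illustration, not study data.

REGIONS = ["left_IFG", "right_IFG", "left_STG", "right_STG"]

# Hypothetical activity each component adds in each region.
ARTICULATION = {"left_IFG": 3.0, "right_IFG": 1.0, "left_STG": 2.5, "right_STG": 1.5}
INTONATION = {"left_IFG": 0.5, "right_IFG": 1.5, "left_STG": 1.0, "right_STG": 2.5}


def combine(*components):
    """Sum the given components region by region (no components = silence)."""
    return {r: sum(c[r] for c in components) for r in REGIONS}


# Each condition is modelled as a combination of components.
conditions = {
    "singing": combine(ARTICULATION, INTONATION),  # words sung on two pitches
    "speaking": combine(ARTICULATION),             # words, no melody
    "humming": combine(INTONATION),                # melody, no words
    "silence": combine(),                          # baseline
}


def contrast(task_a, task_b):
    """Activity in task_a minus activity in task_b, region by region."""
    return {r: conditions[task_a][r] - conditions[task_b][r] for r in REGIONS}


sing_vs_hum = contrast("singing", "humming")
speak_vs_silence = contrast("speaking", "silence")

print(sing_vs_hum)
print(all(math.isclose(sing_vs_hum[r], speak_vs_silence[r]) for r in REGIONS))  # True
```

If intonation and articulation interacted, so that singing was not simply the sum of the two, the two contrasts would diverge. The study reports that in practice they did resemble each other, which is what makes the comparison informative.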

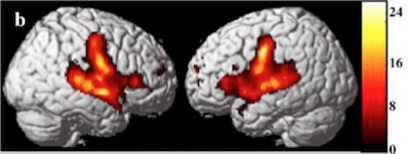

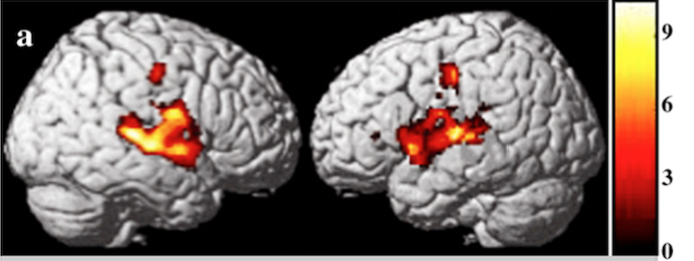

A further comparison, central to their research objective, was ‘singing vs speaking’. This revealed higher activation in, amongst other regions, the mid-portion of the superior temporal gyrus – especially in the right hemisphere:

Discussion

Activity in several brain areas was found to be common to singing, speaking and humming. In the words of the authors: “This pattern of commonly activated brain regions may constitute a shared neural network for the motor preparation, execution, and sensory feedback/control for both intoned and spoken vocal production.”

Of perhaps greater interest were the distinct regions of activation remaining ‘lit’ when singing was compared with speaking. These included 1) “the most inferior portion of the inferior frontal gyrus”, a region of particular interest because it could represent a subdivision of ‘Broca’s area’, itself linked to speech production (perhaps, suggest the authors, this research has revealed a part of Broca’s area that is more active in singing than in speaking); and 2) parts of the superior temporal gyrus already known to be involved in more complex auditory tasks, such as processing melodies or timbres.

Remarkably, the right hemisphere of the ‘singing brain’ was more active (especially in its superior temporal gyrus) than the right hemisphere of the ‘speaking brain’. According to the authors, this might in part be accounted for by the phenomenon of “chunking”, whereby the “prosodic features inherent in music (eg intonation, change in pitch, syllabic stress) may help speakers chunk syllables into words and words into phrases, and it is possible that this chunking is supported more by right hemisphere structures than left hemisphere structures.”

Crucially, though, these findings overall emphasise the ‘bihemispherical’ activation associated with singing; the higher level of right hemisphere activation exists only in comparison with speech, which is itself known to have a left hemisphere bias. Previous studies have tended to generalise towards a clear-cut hemispheric lateralisation: speaking = left hemisphere, singing = right hemisphere. The authors argue that such generalisations are based on studies in which participants didn’t actually sing or speak out loud (‘overtly’) but only imagined singing or speaking (‘covertly’). In the present study the volunteers sang and spoke out loud, and in doing so evoked strong bihemispherical activation, suggesting that the lateralisation of speaking and singing is more nuanced than previously thought.
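The point that right-hemisphere dominance in singing is relative rather than absolute can be illustrated with a second toy sketch. The hemisphere totals below are hypothetical, not taken from the paper: singing is set up as fully bilateral and speaking as left-leaning, yet the singing-minus-speaking contrast comes out entirely on the right. The laterality index used here, (L − R) / (L + R), is a common neuroimaging convention, assumed for illustration; the paper is not claimed to compute it.

```python
# Toy illustration: a contrast can look right-lateralised even when the
# task itself is bilateral. All values are invented, not study data.


def laterality_index(left, right):
    """(L - R) / (L + R): positive = left-leaning, negative = right-leaning.

    Assumes left + right > 0.
    """
    return (left - right) / (left + right)


# Hypothetical total activity per hemisphere for each task (each vs silence).
singing = {"left": 10.0, "right": 10.0}   # bilateral
speaking = {"left": 10.0, "right": 6.0}   # left-leaning

# Contrast: singing minus speaking, hemisphere by hemisphere.
sing_minus_speak = {h: singing[h] - speaking[h] for h in ("left", "right")}

print(laterality_index(singing["left"], singing["right"]))    # 0.0 (bilateral)
print(laterality_index(speaking["left"], speaking["right"]))  # 0.25 (left-leaning)
print(sing_minus_speak)                                       # {'left': 0.0, 'right': 4.0}
print(laterality_index(sing_minus_speak["left"], sing_minus_speak["right"]))  # -1.0
```

Read on its own, the contrast suggests that singing is a right-hemisphere activity. The absolute values show it is bilateral, with the right-hemisphere ‘excess’ existing only relative to left-leaning speech.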

That said, the additional activation of the right temporal lobe, alongside other regions, in the singing brain compared with the speaking brain may, in the words of the authors, be the key to understanding “why patients with left frontal lesions can sing the lyrics of a song, but cannot speak the words” and “why some therapies employing intonation techniques have been reported to facilitate recovery in such non-fluent […] patients.”

Coda

Pierrot Lunaire, op 21 by Arnold Schoenberg

Jane Manning – Sprechstimme

Nash Ensemble, cond. Simon Rattle